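[中美创新时报, compiled report, October 1, 2024] (Compiled by reporter Wen Youping (温友平)) LiquidAI, one of Boston’s best-known artificial intelligence startups, on Monday disclosed for the first time how its software works, and how it might outperform popular AI applications such as ChatGPT. The company has also opened the software to anyone over the internet for further testing. Boston Globe reporter Aaron Pressman filed the following report.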

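In a blog post and white paper published on Monday, LiquidAI claimed that its software can generate answers as quickly as its competitors while needing less memory and computing power, which saves money and consumes less energy.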

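The company calls its technology “liquid foundational models,” in contrast to the “large language models” that underlie OpenAI’s ChatGPT, Google’s Gemini, and Meta’s Llama, among many others. On one popular benchmark, LiquidAI said, its largest and most capable model can match the performance of Meta’s Llama 3.1-70B model, released in July, while needing far less computer memory, because it is only about one-sixth the size.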

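“We’ve markedly improved quality, cost-effectiveness, and power efficiency compared to current GPT models,” LiquidAI chief executive and cofounder Ramin Hasani said in the blog post. “Our innovative architecture allows us to outperform larger traditional models, providing powerful solutions that are both cost-effective and scalable.”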

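LiquidAI was founded in March 2023 by Hasani, chief technical officer Mathias Lechner, and chief scientific officer Alexander Amini, together with MIT professor Daniela Rus, who runs the university’s Computer Science and Artificial Intelligence Laboratory.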

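Hasani and Lechner studied the brain of a tiny roundworm as the inspiration for a new kind of computer neural network. Rather than modeling the network on the human brain, which has about 86 billion neurons, they used the roundworm’s brain, which has only 300.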

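In essence, the digital “neurons” in LiquidAI’s models operate more flexibly than the “neurons” in large language models. The company said the models can generate text or video, or be used for other AI tasks.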

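In the blog post, LiquidAI said it has developed models of varying size and capability, some of which can run on smartphones while others need larger servers in a data center. The company said the models were tested against a handful of comparable rivals on a range of industry benchmarks, and that they matched or exceeded the performance of competing large language models. Those claims were not independently verified by the Boston Globe. (LiquidAI did not test its models against OpenAI’s GPT models, which are far larger and cost hundreds of millions of dollars or more to create.)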

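Starting on Monday, people can access the models through LiquidAI’s chatbot, or try connecting their own software through the company’s website and some other cloud services. The company also said customers will be able to run its software on a variety of hardware, including chips from Apple, Nvidia, Advanced Micro Devices, and Qualcomm.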

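Usama Fayyad, executive director of the Institute for Experiential Artificial Intelligence at Northeastern University, said that LiquidAI has so far shown success with its software in a few ways, but needs to prove it can handle a variety of large-scale challenges, particularly in generating text and in more complicated math or coding problems.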

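“They’ve done the early breakthrough work and it’s a promising direction,” said Fayyad, who worked on AI and data science in Silicon Valley for decades before joining the university. “Now the question is … can they show it applying to many tasks, reliably under many conditions.”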

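Last December, LiquidAI raised $37.5 million in seed funding from high-profile investors led by Boston Celtics co-owner Stephen Pagliuca and San Francisco venture capital firm OSS Capital. The company is rumored to be raising more in its next funding round.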

The company plans to demonstrate its software publicly on October 23 at MIT’s Kresge Auditorium.

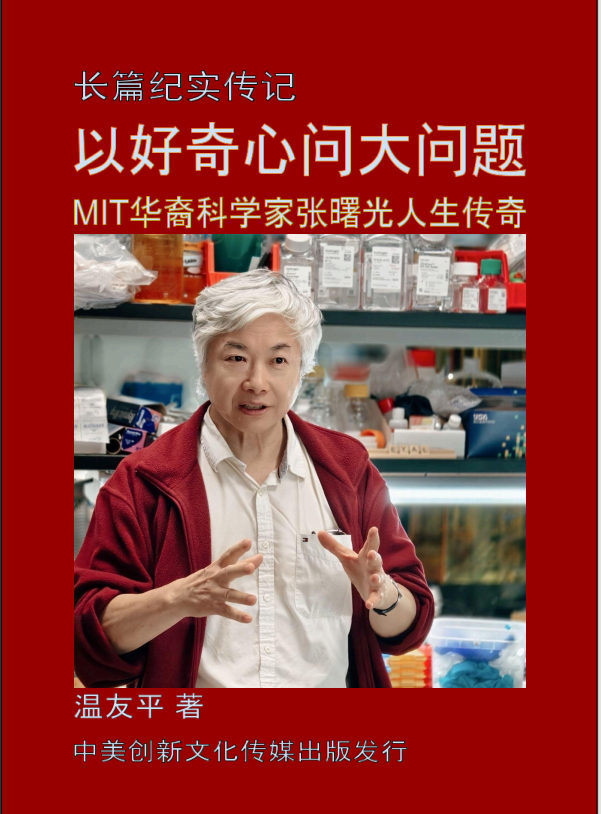

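Cover image: Artificial intelligence startup LiquidAI has released its software for use by anyone over the internet. The founders are, from left, chief executive Ramin Hasani, MIT professor and technical adviser Daniela Rus, chief scientific officer Alexander Amini, and chief technical officer Mathias Lechner. Credit: John Werner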

Original English report:

In AI arms race, Boston’s LiquidAI claims to have a cheaper, more efficient app

The MIT spinoff is releasing its software for use by outsiders

By Aaron Pressman, Globe Staff, Updated September 30, 2024

Artificial intelligence startup LiquidAI released its software for use by anyone over the internet. The founders are, from left, chief executive Ramin Hasani, MIT professor and technical adviser Daniela Rus, chief scientific officer Alexander Amini, and chief technical officer Mathias Lechner. John Werner

One of Boston’s most prominent artificial intelligence startups, LiquidAI, on Monday disclosed how its software works for the first time — and how it might be able to outperform popular AI apps like ChatGPT. The company is also making the software accessible to anyone over the internet for further testing.

In a blog post and white paper released on Monday, LiquidAI claimed that its software could generate answers as quickly as competitors while requiring less memory and computing power, thus saving money and consuming less energy.

The company dubbed its technology “liquid foundational models” to contrast with the “large language models” underlying ChatGPT from OpenAI, Google’s Gemini, and Meta’s Llama, among many others. On one popular benchmark test, LiquidAI said its largest and most capable model could equal the performance of Meta’s Llama 3.1-70B model released in July while needing much less computer memory, since it is only about one-sixth the size.

“We’ve markedly improved quality, cost-effectiveness, and power efficiency compared to current GPT models,” LiquidAI chief executive and cofounder Ramin Hasani said in the blog post. “Our innovative architecture allows us to outperform larger traditional models, providing powerful solutions that are both cost-effective and scalable.”

LiquidAI was founded in March 2023 by Hasani, chief technical officer Mathias Lechner, and chief scientific officer Alexander Amini, with MIT professor Daniela Rus, who runs the university’s Computer Science and Artificial Intelligence Laboratory.

Hasani and Lechner studied the brain of a tiny roundworm as inspiration for creating a new kind of computer neural network. Instead of modeling the network on the human brain, which has about 86 billion neurons, they used the roundworm’s brain, which has just 300 neurons.

Essentially, the digital “neurons” in LiquidAI’s model operate in a more flexible way than the “neurons” in large language models. The model can generate text or video or be used for other AI tasks, the company said.

In the blog post, LiquidAI said it had developed models of varying size and capability, with some able to run on smartphones and others requiring larger servers in a data center. The models were tested against a handful of comparable rivals using a variety of industry benchmarks, where they met or exceeded the performance of competing large language models, the company said. Those claims were not independently verified by the Globe. (LiquidAI did not test its models against OpenAI’s GPT models, which are vastly larger and required hundreds of millions of dollars or more to create.)

Starting on Monday, people can access the models through LiquidAI’s chatbot or try linking their own software via the company’s website and some other cloud services. The company also said customers would be able to run its software on a variety of hardware, including chips from Apple, Nvidia, Advanced Micro Devices, and Qualcomm.

So far, LiquidAI has shown success using its software in a few ways but will need to prove it can attack a variety of large-scale challenges, particularly around generating text and more complicated math or coding problems, Usama Fayyad, executive director of the Institute for Experiential Artificial Intelligence at Northeastern, said.

“They’ve done the early breakthrough work and it’s a promising direction,” said Fayyad, who worked on AI and data science in Silicon Valley for decades before joining the university. “Now the question is … can they show it applying to many tasks, reliably under many conditions.”

Last December, LiquidAI raised $37.5 million in seed funding from high-profile investors led by Boston Celtics co-owner Stephen Pagliuca and San Francisco venture capital firm OSS Capital. The company is rumored to be raising considerably more for its next funding round.

The company is planning a public demonstration of its software on Oct. 23 at MIT’s Kresge Auditorium.