在联邦政府停滞不前的情况下,美国各州开始对人工智能进行监管

【中美创新时报2024 年 6 月 11 日编译讯】(记者温友平编译)上个月,加州立法者推进了约 30 项旨在保护消费者和就业的人工智能措施,这是迄今为止监管这项新技术的最大努力之一。

《纽约时报》记者Cecilia Kang对此作了下述报道。

这些法案寻求对人工智能实施全美最严格的限制。一些技术专家警告说,人工智能可能会消灭整类工作岗位,以虚假信息使选举陷入混乱,并威胁国家安全。这些提案包括防止人工智能工具在住房和医疗保健服务中造成歧视的规则,以及保护知识产权和就业的规则。

“正如加州在隐私问题上所见,联邦政府不会采取行动,因此我们认为,加州必须站出来保护我们自己的公民,”民主党议员、州议会隐私和消费者保护委员会主席 Rebecca Bauer-Kahan 表示。

随着联邦立法者迟迟不对人工智能进行监管,州立法者填补了这一空白,推出了一系列法案,这些法案有望成为事实上的全美法规。加州等州的科技法律经常为全国树立先例,这在很大程度上是因为全国各地的立法者都知道,企业要遵守各州不一的零散法律可能很困难。

据游说团体 TechNet 称,近几个月来,全国各州的立法者提出了近 400 项关于人工智能的法案。加州以 50 项法案领先各州,不过随着立法会期的推进,这一数字已有所减少。

科罗拉多州最近颁布了一项全面的消费者保护法,要求人工智能公司在开发技术时保持“合理谨慎”,以避免歧视等问题。今年 3 月,田纳西州立法机构通过了《ELVIS 法案》(确保肖像、声音和形象安全法案),该法案保护音乐家的声音和肖像不会在未经他们明确同意的情况下被用在 AI 生成的内容中。

北卡罗来纳大学教堂山分校技术政策中心执行主任 Matt Perault 表示,在许多州通过立法比在联邦层面更容易。目前有 40 个州拥有“三重”政府,即立法机构的两院和州长办公室由同一政党掌控,这是至少自 1991 年以来最多的一次。

“我们仍在等待看哪些提案真正成为法律,但加利福尼亚州等州提出的大量 AI 法案表明立法者对这个话题有多感兴趣,”他说。

代表大型软件公司的游说团体商业软件联盟首席执行官 Victoria Espinel 表示,各州的提案正在全球产生连锁反应。

“世界各国都在研究这些草案,以期从中汲取影响其人工智能法律决策的灵感,”她说。

一年多前,OpenAI 的 ChatGPT 等新一波生成式人工智能引发了监管担忧,因为人们清楚地认识到,这项技术有可能颠覆全球经济。美国立法者举行了几次听证会,调查该技术取代工人、侵犯版权甚至威胁人类生存的可能性。

大约一年前,OpenAI 首席执行官 Sam Altman 在国会作证,呼吁制定联邦法规。不久之后,谷歌首席执行官 Sundar Pichai、Meta 首席执行官 Mark Zuckerberg 和特斯拉首席执行官 Elon Musk 齐聚华盛顿,参加由参议院多数党领袖、纽约州民主党人 Chuck Schumer 主持的人工智能论坛。科技领袖们警告称,他们的产品存在风险,并呼吁国会建立护栏。他们还要求支持国内人工智能研究,以确保美国能够保持在该技术开发方面的领先地位。

当时,舒默和其他美国议员表示,他们不会重蹈过去的覆辙,即未能在新兴技术造成危害之前加以约束。

上个月,舒默推出了一份人工智能监管路线图,提议投资 380 亿美元,但短期内几乎没有针对该技术的具体保护措施。今年,联邦立法者提出了成立一个机构来监督人工智能监管的法案、打击人工智能产生的虚假信息的提案以及人工智能模型的隐私法。

但大多数技术政策专家表示,他们预计联邦提案今年不会通过。

“显然需要协调一致的联邦立法,”加州大学洛杉矶分校技术法律与政策研究所执行主任迈克尔·卡拉尼科拉斯 (Michael Karanicolas) 说。

州和全球监管机构纷纷填补这一空白。今年 3 月,欧盟通过了《人工智能法案》,该法案限制执法部门使用可能造成歧视的工具,例如面部识别软件。

州人工智能立法的激增引发了科技公司对这些提案的激烈游说。这种努力在加州首府萨克拉门托尤为明显,几乎每个科技游说团体都扩大了人员规模来游说立法机构。

在立法机构于今年夏末结束会议之前,参议院或众议院通过的 30 项法案将提交各个委员会进行进一步审议。民主党控制着众议院、参议院和州长办公室。

“我们处于一个独特的位置,因为我们是全球第四大经济体,也是众多技术创新者的所在地,”众议院成员、民主党人乔希·洛文塔尔 (Josh Lowenthal) 表示,他提出了一项旨在保护年轻人上网安全的法案。“因此,人们期望我们成为领导者,我们对自己也有这样的期望。”

其中三项法案旨在保护演员和歌手,无论健在还是已故。

美国演员工会-美国电视和广播艺术家联合会是演员和其他创作者的工会,它帮助起草了一项法案,要求制片厂在使用演员的数字复制品时必须获得演员的明确同意。该工会表示,在演员和人工智能公司发生引人注目的冲突后,公众对知识产权保护表示了强烈支持。

获得最多支持的法案要求对最先进的人工智能模型进行安全测试,例如 OpenAI 的聊天机器人 GPT4 和图像生成工具 DALL-E,它们可以生成类似人类的文字或极其逼真的视频和图像。该法案还赋予州检察长就消费者受到的损害提起诉讼的权力。

(《纽约时报》起诉了 OpenAI 及其合作伙伴微软,声称它们侵犯了与人工智能系统相关的新闻内容的版权。)

在加州设有游说人员的科技行业组织进步商会 (Chamber of Progress) 批评了这项法案。该商会本周发布了一份报告,指出该州对科技企业及其税收的依赖,这些税收每年总计约 200 亿美元。

TechNet 加州和西南地区执行董事 Dylan Hoffman 在接受采访时表示:“我们不要过度监管一个目前主要位于加州、但并非必须留在加州的行业。”

本文最初发表于《纽约时报》。

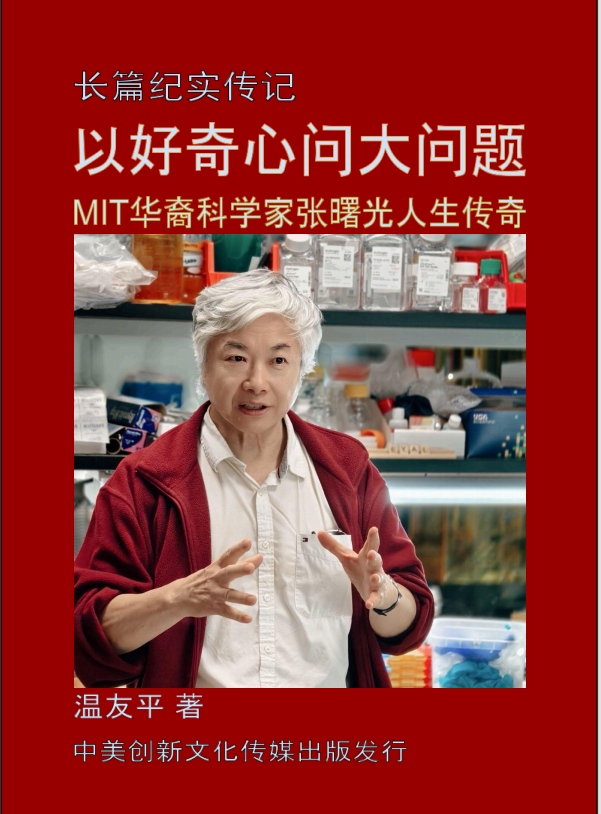

题图:2023 年 9 月 13 日,Meta 首席执行官马克·扎克伯格在华盛顿国会山参加由参议院多数党领袖查克·舒默主持的 2023 年人工智能论坛。HAIYUN JIANG/NYT

附原英文报道:

States take up AI regulation amid federal standstill

By Cecilia Kang, New York Times, Updated June 10, 2024

Lawmakers in California last month advanced about 30 measures on artificial intelligence aimed at protecting consumers and jobs, one of the biggest efforts yet to regulate the new technology.

The bills seek the toughest restrictions in the nation on AI, which some technologists warn could kill entire categories of jobs, throw elections into chaos with disinformation, and threaten national security. The proposals include rules to prevent AI tools from discriminating in housing and health care services and to protect intellectual property and jobs.

“As California has seen with privacy, the federal government isn’t going to act, so we feel that it is critical that we step up in California and protect our own citizens,” said Rebecca Bauer-Kahan, a Democratic Assembly member who chairs the state Assembly’s Privacy and Consumer Protection Committee.

As federal lawmakers drag out regulating AI, state legislators have stepped into the vacuum with a flurry of bills poised to become de facto regulations for all Americans. Tech laws like those in California frequently set precedent for the nation, in large part because lawmakers across the country know it can be challenging for companies to comply with a patchwork across state lines.

State lawmakers across the country have proposed nearly 400 laws on AI in recent months, according to lobbying group TechNet. California leads the states with 50 bills proposed, although that number has narrowed as the legislative session proceeds.

Colorado recently enacted a comprehensive consumer protection law that requires AI companies use “reasonable care” while developing the technology to avoid discrimination, among other issues. In March, the Tennessee Legislature passed the ELVIS Act (Ensuring Likeness Voice and Image Security Act), which protects musicians from having their voice and likenesses used in AI-generated content without their explicit consent.

It’s easier to pass legislation in many states than it is on the federal level, said Matt Perault, executive director of the Center on Technology Policy at the University of North Carolina Chapel Hill. Forty states now have “trifecta” governments, in which both houses of the legislature and the governor’s office are run by the same party — the most since at least 1991.

“We’re still waiting to see what proposals actually become law, but the massive number of AI bills introduced in states like California shows just how interested lawmakers are in this topic,” he said.

And the state proposals are having a ripple effect globally, said Victoria Espinel, CEO of the Business Software Alliance, a lobbying group representing big software companies.

“Countries around the world are looking at these drafts for ideas that can influence their decisions on AI laws,” she said.

More than a year ago, a new wave of generative AI like OpenAI’s ChatGPT provoked regulatory concern as it became clear the technology had the potential to disrupt the global economy. US lawmakers held several hearings to investigate the technology’s potential to replace workers, violate copyrights, and even threaten human existence.

OpenAI CEO Sam Altman testified before Congress and called for federal regulations roughly a year ago. Soon after, Sundar Pichai, CEO of Google; Mark Zuckerberg, CEO of Meta; and Elon Musk, CEO of Tesla, gathered in Washington for an AI forum hosted by the Senate majority leader, Chuck Schumer, Democrat of New York. The tech leaders warned of the risks their products presented and called for Congress to create guardrails. They also asked for support for domestic AI research to ensure the United States could maintain its lead in developing the technology.

At the time, Schumer and other US lawmakers said they wouldn’t repeat past mistakes of failing to rein in emerging technology before it became harmful.

Last month, Schumer introduced an AI regulation road map that proposed $38 billion in investments but few specific guardrails on the technology in the near term. This year, federal lawmakers have introduced bills to create an agency to oversee AI regulations, proposals to clamp down on disinformation generated by AI, and privacy laws for AI models.

But most tech policy experts say they don’t expect federal proposals to pass this year.

“Clearly there is a need for harmonized federal legislation,” said Michael Karanicolas, executive director of the Institute for Technology Law and Policy at UCLA.

State and global regulators have rushed to fill the gap. In March, the European Union adopted the AI Act, a law that curbs law enforcement’s use of tools that can discriminate, such as facial recognition software.

The surge of state AI legislation has touched off a fierce lobbying effort by tech companies against the proposals. That effort is particularly pronounced in Sacramento, the California capital, where nearly every tech lobbying group has expanded its staff to lobby the Legislature.

The 30 bills that were passed out of either the Senate or Assembly will now go to various committees for further consideration before the Legislature ends its session later this summer. Democrats there control the Assembly, Senate, and governor’s office.

“We’re in a unique position because we are the fourth-largest economy on the planet and where so many tech innovators are,” said Josh Lowenthal, an Assembly member and Democrat, who introduced a bill aimed at protecting young people online. “As a result, we are expected to be leaders, and we expect that of ourselves.”

Three of those bills are designed to protect actors and singers, living or dead.

The Screen Actors Guild-American Federation of Television and Radio Artists, the union for actors and other creators, helped write a bill that would require studios to obtain explicit consent from actors for the use of their digital replicas. The union said the public has expressed strong support for intellectual property protections after high-profile conflicts between actors and AI companies.

The bill gaining the most traction requires safety tests of the most advanced AI models, such as OpenAI’s chatbot GPT4 and image creator DALL-E, which can generate humanlike writing or eerily realistic videos and images. The bill also gives the state attorney general power to sue for consumer harms.

(The New York Times has sued OpenAI and its partner, Microsoft, claiming copyright infringement of news content related to AI systems.)

Chamber of Progress, a tech trade group with lobbyists in California, has criticized the bill. It issued a report this week that noted the state’s dependence on tech businesses and their tax revenue, which total around $20 billion annually.

“Let’s not overregulate an industry that is located primarily in California, but doesn’t have to be,” said Dylan Hoffman, executive director for California and the Southwest for TechNet, in an interview.

This article originally appeared in The New York Times.